Recently, at WWDC, Apple announced its venture into the world of augmented reality. If you’re wondering what exactly augmented reality, or “AR”, is: it’s similar to virtual reality, but instead of creating a completely virtual world for a user to experience, AR takes the real world, as captured by your camera, and places virtual elements into it – à la Snapchat face filters or Pokémon GO.

Apple supports AR in their latest phones with a new framework known as ARKit. This framework will allow developers to create some intriguing experiences through either SceneKit (which uses 3D models), SpriteKit (which uses 2D sprites), or Metal (which allows for custom rendering).

Building our first ARKit app

We at LunarLincoln thought this would be a great opportunity to explore the possibilities of AR. Before we could do that, though, we needed the right tools: the new Xcode 9 beta and a device running iOS 11 with at least an A9 chip, which means an iPhone 6S or newer.

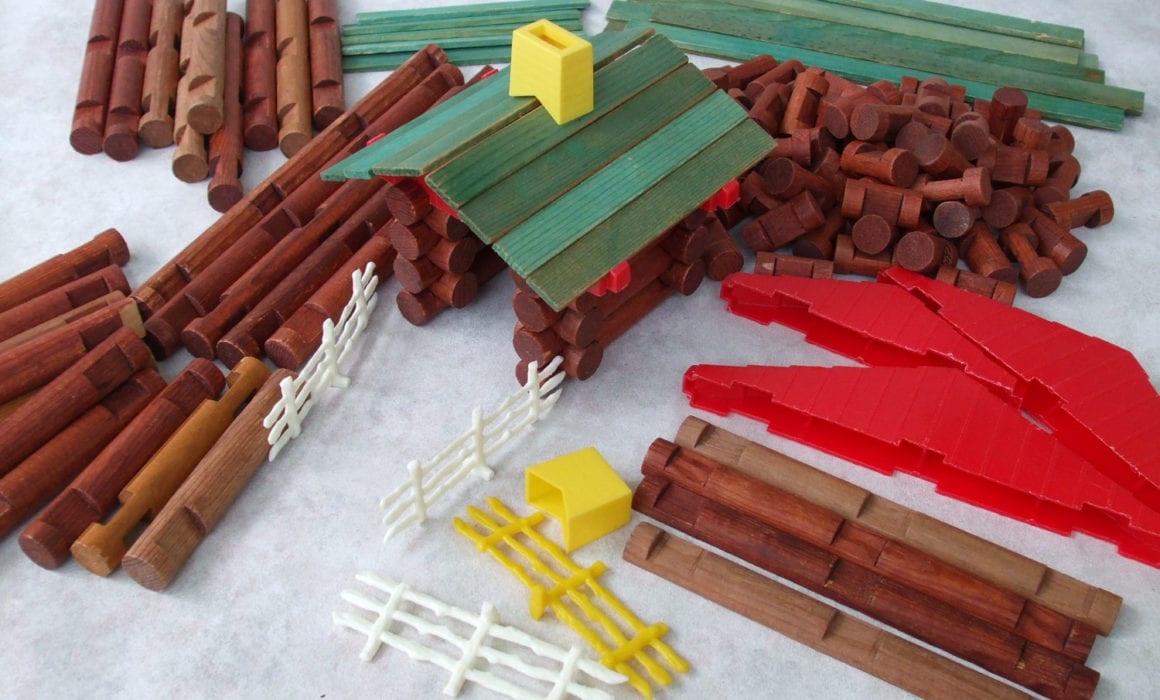

Once we knew we wanted to do something with ARKit we needed to brainstorm some ideas. What would be a cool thing to throw into the real world, and what are the limitations of the software? A lot of time was spent scrolling through MadeWithARKit to see how people were using it.

The primary application of ARKit early on was creating interesting experiences such as showing a virtual rocket land on your pool, or a robot dancing in your living room. The other side of it came in the form of practical tools such as a virtual tape measure, or being able to place furniture in a room to see if it would look good or fit.

To get a feel for ARKit, our app started out as one where you could tap on the ground and a 3D model of some sort would appear. This was a nice idea and gave us a feel for the logic and programming behind ARKit, but it was boring.
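For the curious, that tap-to-place starting point can be sketched roughly like this. It is not our actual app code: `TapPlacer` and `worldPosition(of:)` are names made up for illustration, the model is just a 10 cm box, and `hitTest(_:types:)` is the iOS 11 era API (later iOS versions prefer raycasting).

```swift
import UIKit
import ARKit

/// Returns the world-space position stored in a 4x4 transform (its last column).
func worldPosition(of transform: simd_float4x4) -> SCNVector3 {
    let t = transform.columns.3
    return SCNVector3(t.x, t.y, t.z)
}

/// Hypothetical helper: adds a small box wherever the user taps on a detected plane.
/// The caller must keep a strong reference to it, because gesture recognizers
/// do not retain their targets.
final class TapPlacer: NSObject {
    private let sceneView: ARSCNView

    init(sceneView: ARSCNView) {
        self.sceneView = sceneView
        super.init()
        let tap = UITapGestureRecognizer(target: self, action: #selector(handleTap(_:)))
        sceneView.addGestureRecognizer(tap)
    }

    @objc private func handleTap(_ gesture: UITapGestureRecognizer) {
        let point = gesture.location(in: sceneView)
        // Ask ARKit where the tapped screen point meets an already-detected plane.
        guard let hit = sceneView.hitTest(point, types: .existingPlaneUsingExtent).first else {
            return
        }
        let box = SCNNode(geometry: SCNBox(width: 0.1, height: 0.1, length: 0.1, chamferRadius: 0))
        var position = worldPosition(of: hit.worldTransform)
        position.y += 0.05 // lift by half the box height so it rests on the plane
        box.position = position
        sceneView.scene.rootNode.addChildNode(box)
    }
}
```

Swapping the box for a real model is then mostly a SceneKit question rather than an ARKit one.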

Then someone pitched the idea of making an item rain from the sky. What if we applied the same logic where we tapped on the screen and something appeared, but instead of some boring 3D model, it was a rain cloud that showered money? Yaaaaaas.

The Make it Rain concept

We would use SceneKit and ARKit together to create a cloud wherever the user tapped, and then let it rain money that would pile up on the ground.

To get this app working the way we wanted, we had to read quite a bit about one of the staples of ARKit: plane detection. ARKit uses feature points and plane anchors to find surfaces and mark them in 3D space. Once ARKit has given us this information, we can use that position to simulate the ground by placing a physics body there, so that objects falling from the cloud smack into the ground and stop. SceneKit physics handled the falling and bouncing: each falling object got a dynamic physics body with its categoryBitMask and collisionBitMask filled out so that it would collide with the ground.
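Here is a rough sketch of how those pieces can fit together, not a copy of our project. The `PhysicsCategory` values, `makeFallingBody(for:)`, and `FloorBuilder` are names invented for this example, and the floor is left visible so you can see what ARKit found. It also ignores the later plane updates ARKit sends as it refines a surface.

```swift
import ARKit
import SceneKit

/// Hypothetical category bits; any distinct powers of two would do.
struct PhysicsCategory {
    static let floor = 1 << 0
    static let money = 1 << 1
}

/// Builds a dynamic body: gravity pulls it down, and it collides with
/// the floor and with other falling items.
func makeFallingBody(for node: SCNNode) -> SCNPhysicsBody {
    let shape = SCNPhysicsShape(node: node, options: nil)
    let body = SCNPhysicsBody(type: .dynamic, shape: shape)
    body.categoryBitMask = PhysicsCategory.money
    body.collisionBitMask = PhysicsCategory.floor | PhysicsCategory.money
    body.restitution = 0.3 // a little bounce on impact
    return body
}

/// Hypothetical delegate that turns each detected plane into a static floor.
final class FloorBuilder: NSObject, ARSCNViewDelegate {
    // ARKit calls this when it adds an anchor; plane detection delivers ARPlaneAnchor.
    func renderer(_ renderer: SCNSceneRenderer, didAdd node: SCNNode, for anchor: ARAnchor) {
        guard let plane = anchor as? ARPlaneAnchor else { return }

        // A thin slab matching the plane's estimated size.
        let slab = SCNBox(width: CGFloat(plane.extent.x),
                          height: 0.01,
                          length: CGFloat(plane.extent.z),
                          chamferRadius: 0)
        let floor = SCNNode(geometry: slab)
        // Positions are relative to the anchor's node, so only the center offset is needed.
        floor.position = SCNVector3(plane.center.x, 0, plane.center.z)

        // Static bodies never move but still stop dynamic bodies that hit them.
        let body = SCNPhysicsBody(type: .static,
                                  shape: SCNPhysicsShape(geometry: slab, options: nil))
        body.categoryBitMask = PhysicsCategory.floor
        floor.physicsBody = body
        node.addChildNode(floor)
    }
}
```

Assigning `makeFallingBody(for:)` to each item's `physicsBody` before adding it under the cloud is enough for SceneKit to take over the falling and piling.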

Looking at this app now, most of the learning and code came from SceneKit. ARKit itself was fairly easy to use. The world tracking that fixes an object in space as you move around it is just one line of code (!!!). So ARKit gave us the ability to place objects into the world in realistic positions, but the bulk of the development went into giving objects physics, making sure they collided, and making sure they were in the right position.
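To show how little ARKit setup is involved, here is a minimal view controller sketch. `RainViewController` is a made-up name, and the class names are the ones from the released iOS 11 SDK, so early betas may differ slightly. The line that actually starts world tracking is the call to `session.run`.

```swift
import UIKit
import ARKit

/// Hypothetical view controller showing the bare minimum ARKit setup.
final class RainViewController: UIViewController {
    // ARSCNView renders SceneKit content on top of the camera feed.
    private let sceneView = ARSCNView()

    override func viewDidLoad() {
        super.viewDidLoad()
        sceneView.frame = view.bounds
        sceneView.autoresizingMask = [.flexibleWidth, .flexibleHeight]
        view.addSubview(sceneView)
    }

    override func viewWillAppear(_ animated: Bool) {
        super.viewWillAppear(animated)
        // World tracking is only available on supported hardware (A9 or newer).
        guard ARWorldTrackingConfiguration.isSupported else { return }

        let configuration = ARWorldTrackingConfiguration()
        configuration.planeDetection = .horizontal // needed to find the ground

        // The "one line": start tracking the device's position in the world.
        sceneView.session.run(configuration)
    }

    override func viewWillDisappear(_ animated: Bool) {
        super.viewWillDisappear(animated)
        // Stop the camera and tracking while the view is off screen.
        sceneView.session.pause()
    }
}
```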

Final result?

So what’s the catch?

Working with ARKit presented several new hurdles. Debugging and testing this app definitely felt strange. You frequently had to look up at the ceiling, or back up and walk in a giant circle, just to find where in space you had placed your 3D model. Getting sizes and perspectives correct was a challenge. A model might appear way up in the sky because, at its current scale, it is the size of a cruise ship, yet from your current perspective it looks to be the size of a car.
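One way to tame the cruise-ship problem is to rescale every model to a known real-world size before placing it, since one SceneKit unit corresponds to one meter in ARKit. The helper below is a sketch under that assumption; `normalize(_:toLargestDimension:)` is a name invented here, and it relies on the node's `boundingBox` covering the whole model.

```swift
import SceneKit

/// Uniformly scales `node` so that its largest bounding-box dimension
/// equals `targetSize` (in meters when used with ARKit).
func normalize(_ node: SCNNode, toLargestDimension targetSize: Float) {
    let (minCorner, maxCorner) = node.boundingBox
    let width = Float(maxCorner.x - minCorner.x)
    let height = Float(maxCorner.y - minCorner.y)
    let depth = Float(maxCorner.z - minCorner.z)

    let largest = max(width, height, depth)
    guard largest > 0 else { return } // empty node: nothing to scale

    let factor = targetSize / largest
    node.scale = SCNVector3(factor, factor, factor)
}

// Usage: a model authored 200 units wide becomes half a meter wide.
let oversized = SCNNode(geometry: SCNBox(width: 200, height: 50, length: 100, chamferRadius: 0))
normalize(oversized, toLargestDimension: 0.5)
```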

Another issue to wrap your head around is that the camera is the origin (by default, ARKit's world origin is the device's position when the session starts). If you decide to place something in space using fixed coordinates, it will be relative to where you are holding your camera. Make it Rain had an invisible plane one meter down from the camera to catch anything that fell off the edge of the floor plane and remove it from the scene. When I was testing the app sitting down, the raining objects hit the ground and piled up, but when I stood up, the invisible plane rose above the floor’s plane and made the objects disappear before they touched the ground. This was a weird one that definitely tripped us up.
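One possible way around the sitting-versus-standing problem is to hang the catch plane off the detected floor instead of the camera. The sketch below assumes you already have the floor's world-space height (for example, the Y value of a plane anchor's transform). The names `catcherCategory`, `moneyCategory`, `catcherHeight`, `makeCatcher`, and `Catcher` are all hypothetical.

```swift
import SceneKit

// Hypothetical category bits for this example.
let moneyCategory = 1 << 1
let catcherCategory = 1 << 2

/// World-space Y for the catch plane: a fixed drop below the detected floor,
/// rather than a fixed drop below the camera or world origin.
func catcherHeight(floorY: Float, drop: Float = 1.0) -> Float {
    return floorY - drop
}

/// Builds a large, thin static slab that reports contacts with falling items.
func makeCatcher(floorY: Float) -> SCNNode {
    let slab = SCNBox(width: 100, height: 0.01, length: 100, chamferRadius: 0)
    let node = SCNNode(geometry: slab)
    node.position = SCNVector3(0, catcherHeight(floorY: floorY), 0)

    // A nil shape tells SceneKit to derive the shape from the node's geometry.
    let body = SCNPhysicsBody(type: .static, shape: nil)
    body.categoryBitMask = catcherCategory
    // Falling bodies should include catcherCategory in their own
    // contactTestBitMask as well, so the contact is reported reliably.
    body.contactTestBitMask = moneyCategory
    node.physicsBody = body
    return node
}

/// Removes any node that touches the catcher. Assign an instance to
/// `scene.physicsWorld.contactDelegate` and keep a strong reference to it.
final class Catcher: NSObject, SCNPhysicsContactDelegate {
    func physicsWorld(_ world: SCNPhysicsWorld, didBegin contact: SCNPhysicsContact) {
        let pairs = [(contact.nodeA, contact.nodeB), (contact.nodeB, contact.nodeA)]
        for (node, other) in pairs where other.physicsBody?.categoryBitMask == catcherCategory {
            node.removeFromParentNode()
        }
    }
}
```

With the catcher tied to the floor's height, standing up or sitting down no longer changes where falling objects get cleaned up.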

Beyond weird 3D space issues, there was the issue of finding good 3D models to use in the project. Finding free models that are in a format compatible with SceneKit, available for personal use, and that don’t look like crap is pretty difficult. SceneKit relies on COLLADA (.dae) files, which aren’t particularly common. There were a few good sites out in the interwebs, but TurboSquid was probably the best for finding models. That said, many ARKit developers dip into Unity for their models.
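Once you do have a .dae file in your app bundle, loading it is short. This is a sketch with assumptions: `loadModel(named:inDirectory:)` is a helper name made up here, and `art.scnassets` is simply the asset folder Xcode's SceneKit templates create by default.

```swift
import SceneKit

/// Loads a model file from the app bundle and returns its contents under a
/// single container node, or nil if the file cannot be found or parsed.
func loadModel(named file: String, inDirectory directory: String = "art.scnassets") -> SCNNode? {
    guard let scene = SCNScene(named: file, inDirectory: directory, options: nil) else {
        return nil
    }
    let container = SCNNode()
    // Adding a child to the container moves it out of the loaded scene.
    for child in scene.rootNode.childNodes {
        container.addChildNode(child)
    }
    return container
}
```

The returned node can be positioned, scaled, and given a physics body like any other SceneKit node.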

All in all, this was an exciting project to work on and put out there. It is always enjoyable to explore new software, especially software like ARKit that lends itself to creating interesting experiences. The current version not only rains money, but now has the exciting additions of sharks and stars.

We have a bit more polishing to do before public release alongside iOS 11 this fall, but we’ll be sure to make it available to everyone then. For now you can learn more about coding with ARKit here or here.

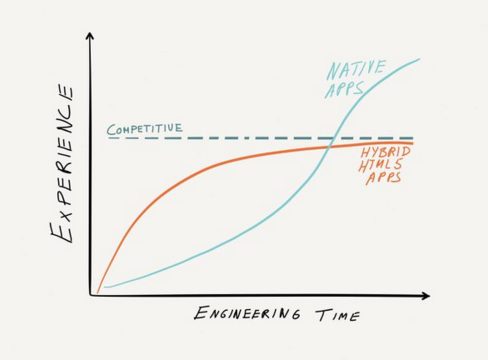

